by Nikhil Srivastava (senior scientist, Simons Institute for the Theory of Computing)

Expanders are sparse and yet highly connected graphs. They appear in many areas of theory: pseudorandomness, error-correcting codes, graph algorithms, Markov chain mixing, and more. I want to tell you about two results, proven in the last few months, that constitute a phase transition in our understanding of expanders.

Random graphs are Ramanujan with 69% probability

One way to quantify the expansion of a graph is via its adjacency spectrum. A \(d\)-regular graph is called Ramanujan if its nontrivial (i.e., other than \(d\)) adjacency eigenvalues are bounded in absolute value by \(2\sqrt{d-1}\). Alon and Boppana showed in 1986 that no infinite sequence of graphs can have nontrivial eigenvalues concentrated on a smaller interval, so Ramanujan graphs may be viewed as optimal spectral expanders as a function of \(d\).
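To make the definition concrete, here is a minimal sketch of a numerical Ramanujan check using NumPy. The function names (`is_ramanujan`, `petersen`, `prism`) and the tolerance are illustrative choices, not anything from the results discussed here. The check drops one copy of the trivial eigenvalue \(d\) and compares the rest against \(2\sqrt{d-1}\).

```python
import numpy as np

def is_ramanujan(adj, tol=1e-9):
    """Check the Ramanujan condition for a d-regular graph given by its adjacency matrix."""
    adj = np.asarray(adj, dtype=float)
    degrees = adj.sum(axis=1)
    d = degrees[0]
    if not np.allclose(degrees, d):
        raise ValueError("graph is not regular")
    eigs = np.linalg.eigvalsh(adj)   # real eigenvalues in ascending order
    nontrivial = eigs[:-1]           # drop one copy of the trivial eigenvalue d
    return bool(np.max(np.abs(nontrivial)) <= 2 * np.sqrt(d - 1) + tol)

def petersen():
    """Adjacency matrix of the Petersen graph (3-regular, 10 vertices)."""
    A = np.zeros((10, 10), dtype=int)
    for i in range(5):
        # outer 5-cycle, spoke, inner pentagram
        for u, v in [(i, (i + 1) % 5), (i, i + 5), (5 + i, 5 + (i + 2) % 5)]:
            A[u, v] = A[v, u] = 1
    return A

def prism(n):
    """Adjacency matrix of the 3-regular prism: two n-cycles joined by a perfect matching."""
    A = np.zeros((2 * n, 2 * n), dtype=int)
    for i in range(n):
        for u, v in [(i, (i + 1) % n), (n + i, n + (i + 1) % n), (i, n + i)]:
            A[u, v] = A[v, u] = 1
    return A

print(is_ramanujan(petersen()))  # True: nontrivial eigenvalues are 1 and -2
print(is_ramanujan(prism(21)))   # False: 2*cos(2*pi/21) + 1 exceeds 2*sqrt(2)
```

The prism example shows how a long, thin graph fails the test: its second eigenvalue creeps toward \(d = 3\) as the cycles get longer.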

The most important result about Ramanujan graphs is that they exist. Motivated by the Alon-Boppana bound, Lubotzky, Phillips, and Sarnak and, independently, Margulis famously constructed in 1988 infinite sequences of Ramanujan graphs whenever \(d = p + 1\) for a prime number \(p\). This is a gift that keeps on giving, as we will see below, albeit one whose proof very few of its recipients understand.

Until December 2024, that was the only known proof of the existence of Ramanujan graphs. Two results came close: Friedman’s theorem (and its improvements) that random \(d\)-regular graphs are almost Ramanujan with high probability, and the existence and construction of bipartite Ramanujan graphs (which are allowed to have an extra eigenvalue of \(-d\)) for all \(d\) via the interlacing families method. It remained mysterious whether the existence of Ramanujan graphs was a precious miracle or the consequence of some robust phenomenon.

Huang, McKenzie, and Yau have shown that a random \(d\)-regular graph on \(N\) vertices is Ramanujan with probability approaching 69% as \(N \rightarrow \infty\). The fact that this probability does not approach 0 or 1 is strange in the context of the probabilistic method (as reflected in a bet of Noga Alon and Peter Sarnak) and rules out typical proof techniques like concentration inequalities. But it was anticipated about 20 years ago in a different context: the universality phenomenon in random matrix theory.
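A small Monte Carlo experiment gives a feel for this statement. The sketch below is an illustration under my own assumptions, not the authors' method: it samples simple \(d\)-regular graphs by the configuration model with rejection and counts how often the Ramanujan condition holds. The function names and parameters are hypothetical, and since the 69% figure is asymptotic, the fraction observed at small \(N\) may differ noticeably.

```python
import numpy as np

def random_regular_adjacency(n, d, rng):
    """Sample a simple d-regular graph on n vertices (configuration model with rejection).

    Half-edges are paired uniformly at random; pairings that create loops or
    multi-edges are rejected, so accepted graphs are uniform over simple
    d-regular graphs. Practical only for small d.
    """
    if (n * d) % 2 != 0:
        raise ValueError("n * d must be even")
    stubs = np.repeat(np.arange(n), d)       # d half-edges per vertex
    while True:
        perm = rng.permutation(stubs)
        u, v = perm[0::2], perm[1::2]        # consecutive half-edges form an edge
        if np.any(u == v):                   # reject self-loops
            continue
        A = np.zeros((n, n), dtype=int)
        np.add.at(A, (u, v), 1)
        np.add.at(A, (v, u), 1)
        if A.max() == 1:                     # reject multi-edges
            return A

def ramanujan_fraction(n, d, trials, seed=0):
    """Fraction of sampled d-regular graphs on n vertices that are Ramanujan."""
    rng = np.random.default_rng(seed)
    bound = 2 * np.sqrt(d - 1)
    hits = 0
    for _ in range(trials):
        A = random_regular_adjacency(n, d, rng).astype(float)
        eigs = np.linalg.eigvalsh(A)         # ascending; eigs[-1] is the trivial eigenvalue d
        if max(abs(eigs[0]), abs(eigs[-2])) <= bound + 1e-9:
            hits += 1
    return hits / trials

print(ramanujan_fraction(n=200, d=3, trials=200))
```

Rejection sampling keeps the code short and the distribution exactly uniform, at the cost of an acceptance rate that decays quickly in \(d\); for larger degrees a dedicated sampler would be the better choice.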

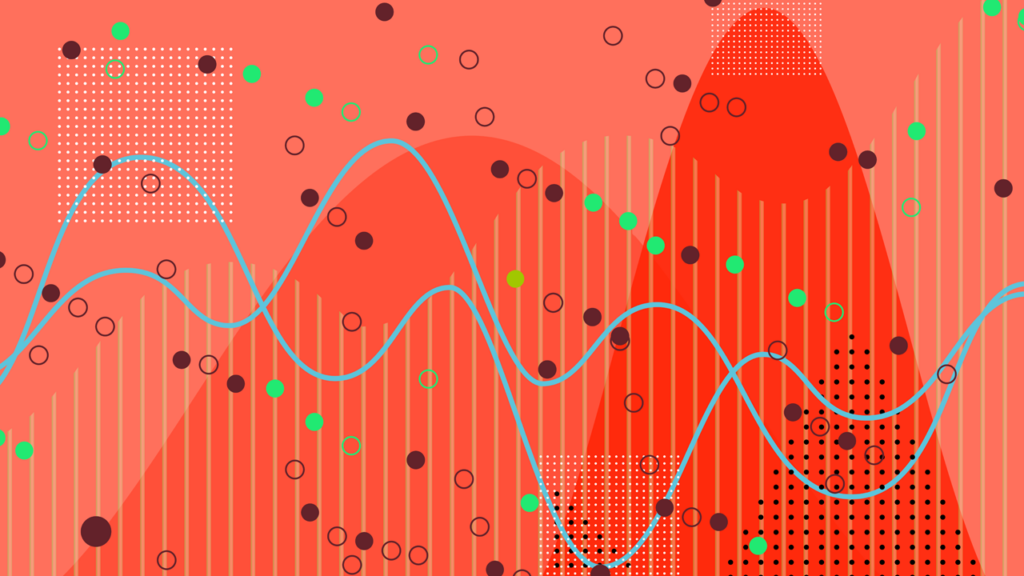

Universality is like the central limit theorem. It predicts (among other things) that the distribution of the top eigenvalue of an \(N \times N\) real symmetric random matrix with independent normalized entries should converge (after appropriate scaling) to a universal law, regardless of the details of the entries. That limit is the Tracy-Widom law, discovered in 1994. The TW law shows up in many contexts: as the top eigenvalue of Gaussian and Wigner random matrices, as the distribution of the length of the longest increasing subsequence in random permutations, and as the distribution of fluctuations in liquid crystal droplets, which can be seen in experiments. It is surprising to me that something so deeply embedded in nature was discovered only 30 years ago, though the proposal of universality in random matrix theory goes back to Wigner in the 1950s (in the context of spacings of eigenvalues rather than top eigenvalues, which he used to model the energy gaps of heavy nuclei).
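The "appropriate scaling" can be made concrete in the Gaussian case. The sketch below assumes the standard GOE normalization (off-diagonal variance 1, diagonal variance 2), under which the top eigenvalue sits near \(2\sqrt{N}\) with fluctuations of order \(N^{-1/6}\). The function names are hypothetical, and the code only produces rescaled samples; it does not evaluate the Tracy-Widom density itself.

```python
import numpy as np

def goe(n, rng):
    """Sample an n x n GOE matrix: off-diagonal variance 1, diagonal variance 2."""
    g = rng.standard_normal((n, n))
    return (g + g.T) / np.sqrt(2)

def rescaled_top_eigenvalues(n, trials, seed=0):
    """Samples of (lambda_max - 2*sqrt(n)) * n**(1/6).

    For large n these should be approximately distributed according to the
    GOE Tracy-Widom law.
    """
    rng = np.random.default_rng(seed)
    out = np.empty(trials)
    for t in range(trials):
        top = np.linalg.eigvalsh(goe(n, rng))[-1]   # largest eigenvalue
        out[t] = (top - 2 * np.sqrt(n)) * n ** (1 / 6)
    return out

samples = rescaled_top_eigenvalues(n=200, trials=500)
print(samples.mean(), samples.std())
```

A histogram of `samples` should show the asymmetric Tracy-Widom shape, with a mean below zero. Swapping the Gaussian entries for another normalized distribution is a quick way to see universality in action.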

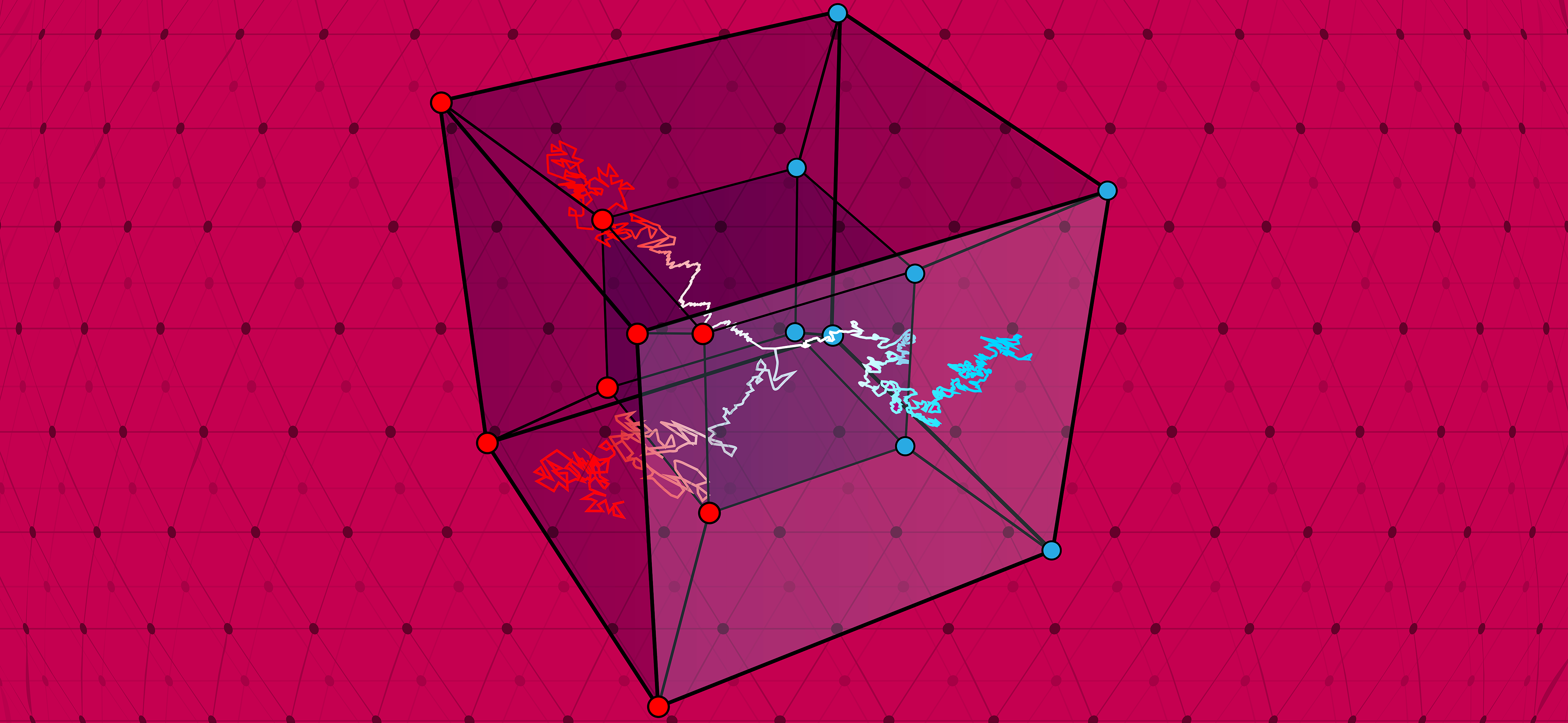

Numerical experiments by Novikoff, Miller, and Sabelli from around 2004 suggested that the extreme nontrivial eigenvalues of random \(d\)-regular graphs should have asymptotically independent Tracy-Widom fluctuations (which are of scale \(N^{-2/3}\)) centered near \(\pm 2\sqrt{d-1}\). Conceptually, this would mean that the edge eigenvalue fluctuations of random \(d\)-regular graphs behave like those of Gaussian orthogonal ensemble (GOE) matrices. This is subtle because the same is definitely not true for sparse Erdős-Rényi graphs, whose extreme eigenvalues are dominated by high-degree vertices. Proving this rigorously would immediately imply a constant probability of being Ramanujan, via a proof that combines both concentration and anticoncentration of the extreme eigenvalues.
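To see where a constant probability would come from, here is a heuristic version of the arithmetic. It rests on assumptions beyond what is stated above: that the centering is exactly at \(\pm 2\sqrt{d-1}\), that the two edges are independent in the limit, and that the limiting law is the GOE Tracy-Widom distribution with CDF \(F_1\). The notation \(\lambda_2\) and \(\lambda_N\) for the largest and smallest nontrivial eigenvalues is mine, and the value \(F_1(0) \approx 0.83\) is taken from standard numerical tables rather than derived here.

\[
\Pr[\text{Ramanujan}] \;=\; \Pr\!\left[\lambda_2 \le 2\sqrt{d-1} \ \text{ and } \ \lambda_N \ge -2\sqrt{d-1}\right] \;\longrightarrow\; F_1(0)^2 \;\approx\; (0.83)^2 \;\approx\; 0.69 .
\]

Each factor is the chance that a Tracy-Widom fluctuation lands on the favorable side of its centering, which is why the limit is neither 0 nor 1.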

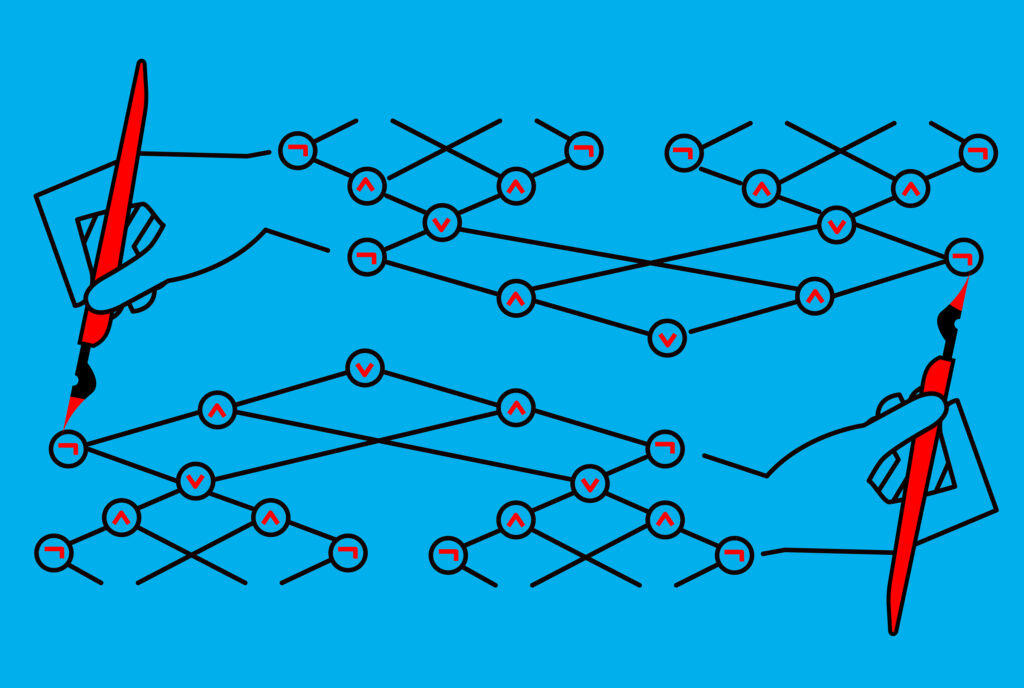

This is what Huang, McKenzie, and Yau achieve. Their approach is a variant of the “local relaxation flow” strategy for proving universality results, introduced by Erdős, Schlein, and Yau in 2009 and refined in dozens of papers since then (see also this book). Letting \(A\) denote the adjacency matrix of a random \(d\)-regular graph on \(N\) vertices, the three steps in this strategy are:
