By Benjamin Rossman and Li-Yang Tan

Computational complexity theory is the branch of computer science that studies the nature and limits of efficient computation; an overarching goal in this area is the classification of computational problems according to their inherent difficulty. One of the most intensively studied such classifications is provided by the Polynomial Hierarchy, which was introduced by Albert Meyer and Larry Stockmeyer in 1972. This hierarchy classifies problems according to a natural notion of logical complexity, and is defined with an infinite number of levels: problems at the zeroth level are the “easiest”, and for every integer k, problems at the (k+1)-st level have logical complexity “one notch higher” than those at level k. In this vignette we describe some recent work done at the Simons Institute, in collaboration with Rocco Servedio of Columbia University, which sheds new light on the structure of this hierarchy.

The Polynomial Hierarchy begins at the zeroth level with the class P of problems solvable in polynomial time; these are the easiest problems. In theoretical computer science P captures an elegant and robust notion of computational efficiency: polynomial-time algorithms are considered efficient, and problems in P are considered tractable. Examples of fundamental problems in P include Linear Programming, Matching, and Primality. At the first level above P in the hierarchy is NP, the class of problems solvable in nondeterministic polynomial time, meaning that a “yes” answer can be verified efficiently once a suitable proof of it (for example, a candidate solution) is supplied. Well-known examples of problems in NP include the Boolean Satisfiability and Traveling Salesman problems, among countless others that arise in surprisingly diverse contexts.

Clearly P ⊆ NP, since the answer to a computational problem can be verified efficiently if it can be determined efficiently. But is the converse true? No efficient algorithms are known for the hardest problems in NP, the so-called NP-complete problems: while a “yes” answer to these problems can be verified in polynomial time, to date our best algorithms for solving them (that is, for determining whether the answer is “yes” or “no”) all run in exponential time in the worst case. Indeed, the famous P ≠ NP conjecture asserts that there do not exist efficient algorithms for solving NP-complete problems: any algorithm for these problems must run in super-polynomial time. In other words, the P ≠ NP conjecture asserts that the first two levels of the Polynomial Hierarchy are distinct.
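The gap between verifying and solving can be made concrete with a small sketch for Boolean Satisfiability. The encoding (a formula as a list of clauses of signed integers) and the function names `verify` and `brute_force_sat` are assumptions chosen for illustration, not part of the work described here. Checking a proposed assignment takes time proportional to the size of the formula, while the naive search shown tries all 2^n assignments.

```python
from itertools import product

# Assumed encoding: a CNF formula is a list of clauses, each clause a list of
# nonzero integers. Literal k means "variable k is True", -k means "variable k is False".

def verify(cnf, assignment):
    """Check a proposed assignment; runs in time linear in the formula size."""
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in cnf
    )

def brute_force_sat(cnf, num_vars):
    """Naive search over all 2**num_vars assignments (exponential time)."""
    for bits in product([False, True], repeat=num_vars):
        assignment = {i + 1: bits[i] for i in range(num_vars)}
        if verify(cnf, assignment):
            return assignment
    return None

# (x1 or x2) and (not x1 or x3) and (not x2 or not x3)
example = [[1, 2], [-1, 3], [-2, -3]]
print(verify(example, {1: True, 2: False, 3: True}))
print(brute_force_sat(example, 3))
```

The P ≠ NP conjecture says, roughly, that for NP-complete problems no algorithm can close this gap: the search step cannot be brought down to polynomial time, however cleverly it is organized.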

To infinity and beyond

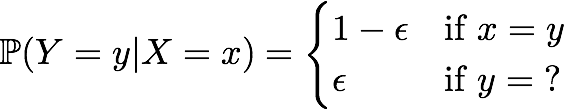

The Polynomial Hierarchy extends beyond P and NP to include an infinite number of classes of increasing logical complexity: just as NP generalizes P, the second level of the hierarchy generalizes NP, the third generalizes the second, and so on ad infinitum. To understand this hierarchy it is convenient to think of problems in NP as asking questions with a single existential quantifier. For example, the Boolean Satisfiability problem asks if there exists a satisfying assignment to a Boolean formula, and the Traveling Salesman problem asks if there exists a short tour visiting every city exactly once. Problems at the k-th level of the hierarchy allow for questions with not just one existential quantifier, but k alternating quantifiers:

Does there exist X, such that for all Y, there exists Z such that…?
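As a rough illustration of what such a question means, the sketch below evaluates an alternating-quantifier statement by exhaustive search over short bit strings. The function `holds`, the predicate `phi`, and the choice of one-bit blocks are hypothetical and chosen only for this example. In the actual hierarchy, X, Y, and Z are strings of polynomial length and the final condition must be checkable in polynomial time; the exponential search here shows only the logical structure, not an efficient algorithm.

```python
from itertools import product

def holds(quantifiers, predicate, block_size, prefix=()):
    """Evaluate Q1 X1, Q2 X2, ... : predicate(X1, X2, ...) by brute force.

    quantifiers is a sequence of "exists" / "forall"; each Xi ranges over
    all tuples of block_size Boolean values.
    """
    if not quantifiers:
        return predicate(*prefix)
    branches = (
        holds(quantifiers[1:], predicate, block_size, prefix + (block,))
        for block in product([False, True], repeat=block_size)
    )
    # "exists" needs one successful branch; "forall" needs all of them.
    return any(branches) if quantifiers[0] == "exists" else all(branches)

def phi(x, y, z):
    # Hypothetical condition on one-bit blocks: (x or y) and (z differs from y).
    return (x[0] or y[0]) and (z[0] != y[0])

# Does there exist X, such that for all Y, there exists Z with phi(X, Y, Z)?
print(holds(("exists", "forall", "exists"), phi, block_size=1))
# Swapping the quantifiers changes the question, and here the answer too.
print(holds(("forall", "exists", "forall"), phi, block_size=1))
```

With a single "exists" this reduces to an NP-style question; each additional alternation corresponds to moving one level up the hierarchy.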
