by Teresa Przytycka and Roded Sharan

The Spring 2016 Simons Institute program on Algorithmic Challenges in Genomics focused on three main research areas: Computational Cancer Biology, Regulatory Genomics and Epigenomics, and Network Biology. The different tracks allowed us, the long-term visitors, to better understand the relationships among the different areas and come up with ideas on how they can be integrated to gain further insights into biology. Here, we would like to tell the story of one such integration attempt that was initiated during the Simons program, where ideas from network biology led to new formulations and novel findings in cancer genomics.

A fundamental concept in cancer genomics is that of a disease module that contains functionally related genes that jointly drive the disease. The discovery of such gene sets can reveal the molecular mechanisms that underlie the disease and suggest potential therapeutic targets. It is generally assumed that cancer progresses through stages, starting from a precancerous lesion caused, for example, by DNA damage. If such damage cannot be properly handled by the cell repair mechanisms, for example when such mechanisms are compromised by mutations, an early stage of cancer emerges. In this state, the organism still relies on cellular mechanisms such as apoptosis, cell cycle arrest, or senescence, to guard against uncontrolled cell growth and proliferation. However, once those barriers are compromised, say by further mutations, the disease progresses. While the progression seems sequential, steered by driver mutations that provide a growth advantage to the evolving tumor cells, the actual process is more complex and not fully understood. To shed light on the progression process, we need not only to be able to identify disease modules, but also to understand the relationships among them.

A common approach to detecting cancer modules is to look for sets of genes whose mutations are mutually exclusive across a cohort of patients. The underlying assumption is that a module can be disrupted by a mutation in any one of its member genes, thereby driving disease progression, and that additional mutations in the same module provide no further advantage to the tumor. As driver mutations are selected for during cancer evolution, the module’s genes often exhibit mutually exclusive mutation patterns across patients. Other inter-gene relations, such as physical interactions, co-expression, and functional similarity, can also inform the discovery of cancer modules. Thanks to public projects such as The Cancer Genome Atlas, researchers can now access genomic data from thousands of patients across dozens of cancer types. Such valuable data sources enable the systematic search for disease modules using different computational and statistical approaches [1].
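To make the notion of mutual exclusivity concrete, here is a minimal sketch of how one might summarize it for a candidate gene set. The function name `exclusivity_summary`, the data layout, and the toy gene and patient labels are assumptions made for illustration; this simple ratio is not the statistical test used in any particular study.

```python
from typing import Dict, Iterable, Set


def exclusivity_summary(mutations: Dict[str, Set[str]], genes: Iterable[str]) -> Dict[str, float]:
    """Summarize how mutually exclusive a candidate gene set is.

    `mutations` maps a gene name to the set of patient IDs that carry a
    mutation in that gene (a hypothetical layout chosen for this sketch).
    """
    # Count, for every patient, how many genes of the set are mutated.
    hits: Dict[str, int] = {}
    for gene in genes:
        for patient in mutations.get(gene, set()):
            hits[patient] = hits.get(patient, 0) + 1

    covered = len(hits)  # patients with at least one mutated gene in the set
    exclusive = sum(1 for count in hits.values() if count == 1)  # exactly one
    return {
        "covered": covered,
        "exclusive": exclusive,
        "exclusivity": exclusive / covered if covered else 0.0,
    }


# Toy cohort with made-up labels: G1 and G2 never co-occur, G1 and G3 share p2.
toy = {
    "G1": {"p1", "p2"},
    "G2": {"p3", "p4"},
    "G3": {"p2", "p5"},
}
perfect = exclusivity_summary(toy, ["G1", "G2"])
overlapping = exclusivity_summary(toy, ["G1", "G3"])
```

In this toy cohort, the pair G1 and G2 covers four patients with no overlap, whereas G1 and G3 share one patient, which lowers the exclusivity ratio.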

While several research projects have tried to infer disease modules from mutation data, most have focused on mutual exclusivity relations. Only a few projects have considered co-occurrence relations, the integration of other types of data, or the modeling of inter-module relations. These more complex scenarios, however, are very common in network biology, where multiple relations can be modeled by multiple edge types in a gene network. The discovery of interesting edge patterns can then be cast as a clustering problem where one aims to maximize within-cluster associations as well as between-cluster relations. It thus seemed natural to try to apply such general clustering formulations in the cancer genomics domain.
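As a rough illustration of such a clustering objective, the sketch below scores a candidate collection of modules by summing one type of edge weight inside modules and another type across modules. The name `score_modules`, the two weight dictionaries, and the toy genes are hypothetical; the weighting here is an assumption for illustration, not a published scoring scheme.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set

# Edge weights keyed by unordered gene pairs (an assumed representation).
Weights = Dict[FrozenSet[str], float]


def score_modules(modules: List[Set[str]], within: Weights, between: Weights) -> float:
    """Sum `within` weights inside modules and `between` weights across modules."""
    total = 0.0
    # Pairs of genes that share a module contribute their within-edge weight.
    for module in modules:
        for a, b in combinations(sorted(module), 2):
            total += within.get(frozenset((a, b)), 0.0)
    # Pairs of genes in two different modules contribute their between-edge weight.
    for m1, m2 in combinations(modules, 2):
        for a in m1:
            for b in m2:
                total += between.get(frozenset((a, b)), 0.0)
    return total


# Toy network: A-B and C-D are "within"-type edges, A-C and B-D are "between"-type.
within = {frozenset(("A", "B")): 1.0, frozenset(("C", "D")): 1.0}
between = {frozenset(("A", "C")): 0.5, frozenset(("B", "D")): 0.5}

good = score_modules([{"A", "B"}, {"C", "D"}], within, between)
bad = score_modules([{"A", "C"}, {"B", "D"}], within, between)
```

The first grouping places each edge type where it is rewarded and therefore scores higher than the second, which is the behavior a clustering procedure would try to exploit.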

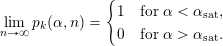

Multiple relations can exist within and between genes of true cancer modules. We already mentioned mutual exclusivity within modules (Figure, Panel A), but it can also be present between modules, representing different cancer subtypes or different cancer progression pathways (Figure, Panel B). Similarly, mutations can co-occur within modules, representing some shared mutagenic process, or between modules, representing a synergistic relation, where two alternative pathways need to be disrupted for the disease to occur (Figure, Panel C). Finally, known gene interactions or functional associations are typically within modules, representing their shared role in some molecular pathway driving the disease.

To capture all these different relations, we, together with Phuong Dao, Yoo-Ah Kim, Damian Wojtowicz, and Sanna Madan, modeled them within a general clustering framework that scores a given collection of modules by their within- and between-edges. The resulting clustering problem is reminiscent of cluster editing, also known as correlation clustering, where one wishes to minimally edit the edge set of a given graph so that it is transformed into a collection of disjoint cliques. While the problem is known to be NP-hard [2], it admits a practical and exact solution via integer linear programming [3]. Motivated by the success of the latter approach, we formulated the within-between module detection problem using integer linear programming. By applying a commercial solver (CPLEX), we could solve the problem to optimality on current data sets, consisting of hundreds of mutated genes and thousands of relations across hundreds of patients, in less than an hour. The integrated network view of these relations made it possible to systematically test the contribution of the different types of relations, both within and between modules, to module discovery.
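For readers unfamiliar with it, a standard integer linear programming formulation of unweighted cluster editing on a graph $G = (V, E)$ can be written as follows. The notation is ours and is given only as background: $x_{ij} = x_{ji}$ is a binary variable that equals 1 when vertices $i$ and $j$ end up in the same clique. This is the classical cluster editing program, not the within-between formulation itself.

$$
\begin{aligned}
\min \quad & \sum_{\{i,j\} \in E} (1 - x_{ij}) \; + \sum_{\{i,j\} \notin E} x_{ij} \\
\text{s.t.} \quad & x_{ij} + x_{jk} - x_{ik} \le 1 \quad \text{for all distinct } i, j, k \in V, \\
& x_{ij} \in \{0, 1\} \quad \text{for all } i \ne j \in V.
\end{aligned}
$$

The first sum counts deleted edges and the second counts inserted edges, while the triangle constraints enforce transitivity, so that the pairs with $x_{ij} = 1$ form disjoint cliques.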

In an application to real data from The Cancer Genome Atlas project, we were able to form comprehensive maps of the modules underlying different cancers and gain novel insights about the roles of different genes in driving the disease. As an example, when maximizing the mutual exclusivity of mutations within modules and the co-occurrence of mutations between modules, we uncovered pairs of modules that work in a synergistic fashion, where the disruption of both is required for the disease to occur (Figure, Panel C). Overall, our general strategy that accounts for a number of complementary relations proved to be a valuable tool in dissecting the mutational landscape of cancer to infer molecular mechanisms that drive the disease.

References

- Y-A Kim, D-Y Cho, and TM Przytycka (2016). “Understanding Genotype-Phenotype Effects in Cancer via Network Approaches.” PLoS Comput Biol 12: e1004747.

- R Shamir, R Sharan, and D Tsur (2004). “Cluster graph modification problems.” Discrete Appl Math 144: 173–182.

- S Böcker, S Briesemeister, and GW Klau (2009). “Exact Algorithms for Cluster Editing: Evaluation and Experiments.” Algorithmica 60: 316–334.